How I Built Persistent Memory for AI Using TypeScript and Markdown

Cross-platform. TypeScript strict mode. 30 E2E tests. Multi-OS CI. Independent technical review. Built in less than 24 hours, with AI.

This is the story of the build. What happened in the following days is another story.

The pain that woke me up in the morning

If you use Claude, Codex, or any AI assistant for programming, you’ve lived this. Every session starts the same way: paste context, repeat rules, reinforce decisions already made, warn about what cannot be done, explain the project’s patterns one more time. No matter how good the AI is, it always starts from zero.

“Ah, but just keep a CLAUDE.md, GEMINI.md, etc.” Yes, you can do that. But what can a single file really represent in a living project, with history, accumulated decisions, and current state? In the end, it’s like working with an extremely capable developer, but with a goldfish memory.

For months I had been trying to solve this with hand-written Markdown, and managing, with a lot of manual work. It worked, but it was rough. Every new project felt like starting from scratch, as if I were carving important decisions into stone every time.

The problem was never the AI. It was the lack of persistent memory, real history, and decisions that don’t get lost between sessions.

The idea: external memory for AI

After shaping and organizing the ideas in my head for a while, I woke up at 8 a.m. with my mind made up. It was like one of those days when you wake up possessed by the urge to clean the house: everything was clear enough to turn into code.

That’s how AIPIM (AI Project Instruction Manager) was born. It used to be AI Project Manager, then AIPM, then… through a random but valuable insight, AIPIM. Not as hype, but as a practical tool. The proposal is simple: create an external memory layer between the project and the AI assistant.

This layer solves two core pains: keeping persistent context between sessions and recording the real project history, including decisions, tasks, and current state. All of this is organized in a .project/ structure, entirely in Markdown, which the AI can read, respect, and follow.

- context.md becomes the source of truth

- current-task.md defines the focus

- decisions/ stores immutable architectural decisions

- backlog/ with completed/ records work honestly

I was already using exactly this methodology before AIPIM existed. The difference is that now it became an automated CLI.

How I worked with AI (for real)

I didn’t use passive autocomplete. I used Claude Code as a pair programmer. I defined the direction, the AI executed, I reviewed everything, fixed a lot of things, and nothing passed without full understanding. There was no “accept suggestion.” There was technical dialogue.

A real example: I asked for a framework detector. The AI proposed heuristics using package.json and the filesystem. I pointed out edge cases, like React with Vite versus CRA, and demanded hierarchical fallback. The flow was continuous, no context switching, always with human decisions in command.

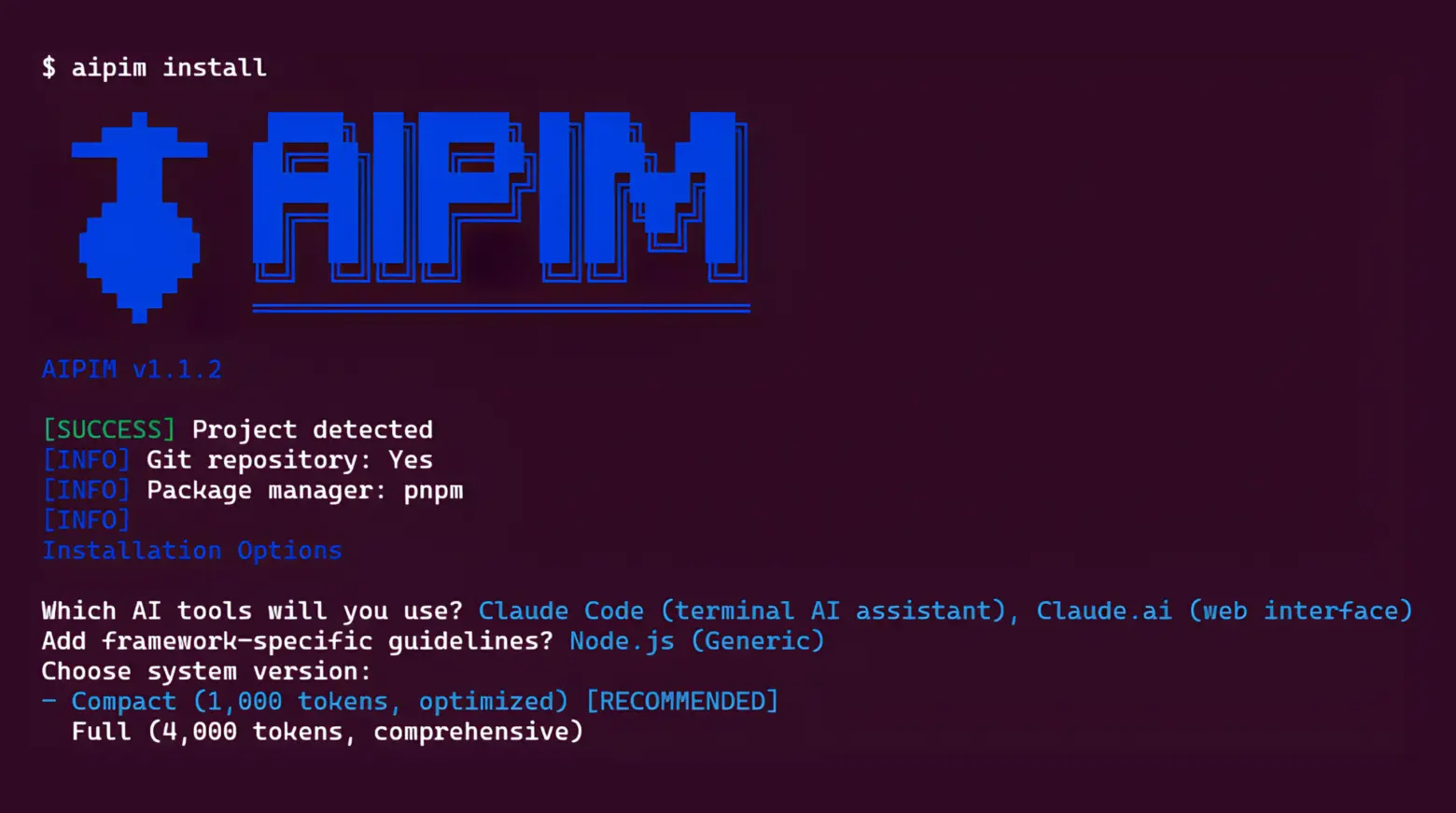
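To make the hierarchical fallback concrete, here is a minimal sketch of how such a detector could be structured. It is not AIPIM’s actual code: the `Framework` labels, the `Rule` shape, and the specific rules are assumptions chosen for illustration. The idea is an ordered list of rules, from most specific to most generic, where the first match wins.

```typescript
// Hypothetical sketch: labels and rules are illustrative, not AIPIM's real detector.
type Framework = "next" | "react-vite" | "react-cra" | "react" | "vue" | "node" | "unknown";

interface PackageJson {
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
}

interface Rule {
  framework: Framework;
  matches: (deps: ReadonlySet<string>, files: ReadonlySet<string>) => boolean;
}

// Ordered from most specific to most generic; the first matching rule wins.
const rules: readonly Rule[] = [
  { framework: "next", matches: (d) => d.has("next") },
  {
    framework: "react-vite",
    matches: (d, f) =>
      d.has("react") && (d.has("vite") || f.has("vite.config.ts") || f.has("vite.config.js")),
  },
  { framework: "react-cra", matches: (d) => d.has("react") && d.has("react-scripts") },
  { framework: "react", matches: (d) => d.has("react") },
  { framework: "vue", matches: (d) => d.has("vue") },
  { framework: "node", matches: (_d, f) => f.has("package.json") },
];

// `pkg` is the parsed package.json (or null if absent); `files` lists root-level file names.
function detectFramework(pkg: PackageJson | null, files: readonly string[]): Framework {
  const deps = new Set<string>([
    ...Object.keys(pkg?.dependencies ?? {}),
    ...Object.keys(pkg?.devDependencies ?? {}),
  ]);
  const fileSet = new Set<string>(files);
  for (const rule of rules) {
    if (rule.matches(deps, fileSet)) return rule.framework;
  }
  return "unknown";
}
```

Keeping the rules in a plain ordered array makes an edge case like React with Vite versus CRA a matter of rule order rather than nested conditionals.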

By the afternoon, the first aipim install worked

Near the end of the afternoon, the first milestone was standing: a functional CLI. It wasn’t magic. There were bugs, tweaks (my goodness, many of them — if Claude had ears, they’d be hurting), and review. AI leaves traces, especially in complex tasks, and someone needs to follow those traces carefully. From the start, I made it clear that the project needed to be cross-platform, with real error handling like EACCES and ENOSPC, working on Windows, macOS, and Linux.
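As an illustration of what handling errors like EACCES and ENOSPC can look like in a Node.js CLI, here is a small sketch. The helper names (`errorCode`, `explainFsError`, `safeWrite`) and the message wording are hypothetical, not taken from AIPIM; only the error codes themselves are standard Node.js system error codes.

```typescript
import { writeFile } from "node:fs/promises";

// Hypothetical helper: reads a string `code` property from an unknown error value.
function errorCode(err: unknown): string | undefined {
  if (typeof err !== "object" || err === null) return undefined;
  const code: unknown = Reflect.get(err, "code");
  return typeof code === "string" ? code : undefined;
}

// Maps common filesystem error codes to messages a user can act on.
function explainFsError(err: unknown, path: string): string {
  switch (errorCode(err)) {
    case "EACCES":
    case "EPERM": // permission problems on Windows often surface as EPERM
      return `Permission denied writing ${path}. Check ownership or choose a writable directory.`;
    case "ENOSPC":
      return `No space left on device while writing ${path}.`;
    case "ENOENT":
      return `Parent directory of ${path} does not exist.`;
    default: {
      const detail = err instanceof Error ? err.message : String(err);
      return `Failed to write ${path}: ${detail}`;
    }
  }
}

type WriteResult = { ok: true } | { ok: false; message: string };

// Writes a file and returns a result object instead of letting the raw error escape.
async function safeWrite(path: string, content: string): Promise<WriteResult> {
  try {
    await writeFile(path, content, "utf8");
    return { ok: true };
  } catch (err: unknown) {
    return { ok: false, message: explainFsError(err, path) };
  }
}
```

Matching on the error code rather than on message text is what keeps this portable, since the messages differ between operating systems while the codes do not.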

Updates without overwriting customization

This was a point where AI alone wouldn’t solve it. The problem was updating base templates without destroying user customizations. The obvious suggestion was to use git diff. My answer was simple: what if there’s no git? What if the repository is dirty?

The solution was architectural. Each generated file gets a SHA-256 hash of its content, embedded as a comment, which acts as its signature. If the recomputed hash matches the embedded one, the file is pristine and can be updated. If it diverges, the file was modified and must be preserved. Claude implemented it perfectly, but the decision was human. This point, by the way, was one of the most praised in the technical review, which compared it to strategies used in Kubernetes manifests.
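The mechanism can be sketched in a few lines. This is an illustration under stated assumptions, not AIPIM’s implementation: the comment format (`<!-- signature: sha256:... -->` on the first line), the function names, and the choice to hash only the body below the comment are all hypothetical.

```typescript
import { createHash } from "node:crypto";

// Hypothetical marker format: a first-line comment, followed by the file body.
const SIGNED_FILE = /^<!-- signature: sha256:([0-9a-f]{64}) -->\r?\n([\s\S]*)$/;

function sha256(text: string): string {
  return createHash("sha256").update(text, "utf8").digest("hex");
}

// Prepends a signature comment computed over the body only.
function sign(body: string): string {
  return `<!-- signature: sha256:${sha256(body)} -->\n${body}`;
}

type FileState = "pristine" | "modified" | "unsigned";

// Recomputes the hash of the body and compares it with the embedded one.
function classify(content: string): FileState {
  const match = SIGNED_FILE.exec(content);
  if (match === null) return "unsigned";
  const [, expected = "", body = ""] = match;
  return sha256(body) === expected ? "pristine" : "modified";
}

// Only files that still match their own signature are safe to replace.
function shouldOverwrite(content: string): boolean {
  return classify(content) === "pristine";
}
```

Because the check needs nothing but the file itself, it works without git and regardless of whether the repository is dirty. In this sketch, files with no signature at all are treated as not safe to overwrite, which is the conservative choice.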

Tests: where AI really shined

I don’t like writing tests (although I do write them), and here the AI was absurdly good. I asked for unit tests for the detector and, in minutes, there were 17 cases covering all frameworks. Then I asked for more: 30 cross-platform E2E scenarios. Tests came for installation, update, validation, and task lifecycle, running on Linux, Windows, and macOS in CI. That would have been days of manual work.

It took just a few hours.

TypeScript Strict, no compromises

Here I was picky, but I would have been just as picky with myself. Strict mode, zero any. The rule was explicit and the AI respected it. In the technical review, the type safety score was 95/100.
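For readers who have not worked under a “zero any” rule, the usual pattern is to treat external data as `unknown` and narrow it with a type guard. The sketch below is a generic example of that pattern; the `TaskMeta` shape and the function names are hypothetical and not part of AIPIM.

```typescript
// Hypothetical shape, used only to illustrate narrowing from `unknown`.
interface TaskMeta {
  title: string;
  done: boolean;
}

// Type guard: checks the runtime shape before the compiler trusts the type.
function isTaskMeta(value: unknown): value is TaskMeta {
  if (typeof value !== "object" || value === null) return false;
  const title: unknown = Reflect.get(value, "title");
  const done: unknown = Reflect.get(value, "done");
  return typeof title === "string" && typeof done === "boolean";
}

// JSON.parse returns `any`; assigning it to `unknown` forces an explicit check.
function parseTaskMeta(json: string): TaskMeta {
  const parsed: unknown = JSON.parse(json);
  if (!isTaskMeta(parsed)) throw new TypeError("Invalid task metadata");
  return parsed;
}
```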

The Brutal and Honest Feedback

The strengths were clear: clean architecture, the SHA-256 signature system, 30 cross-platform E2E scenarios, and strict TypeScript. The red flags were fair too: a diff command announced but not implemented, a dependency installed and not used, and too few tests in the updater, a critical module.

The final score was 88/100.

What I Learned in These First 24 Hours

AI doesn’t replace judgment; it executes. Tests stopped being an excuse; in 2026, not having tests is a choice. And speed doesn’t have to kill quality when there’s process.

Numbers, 24 Hours Later

The result: about 2,000 lines of core code, 2,000 lines of tests, 30 E2E scenarios, and strict TypeScript with zero uses of any. Without AI, this would have taken one to two weeks. With AI, it took about 24 real hours, including a trip to the mall with my family in the middle of the day. The project was functional, tested, and published to npm. Exactly as I wanted: an evolved version of my old Jurassic Markdown manager.

What Came Next

In the following days, I used AIPIM to manage AIPIM itself:

- Documented nine sessions

- Completed 31 tasks

- Wrote eight ADRs

- Fixed all 16 red flags

- Raised the score from 88 to 90

But that’s for another post, because this one has already crossed the healthy long-form limit (even though I enjoy long reads).

For Those Who Want to Go Further

The complete technical report, generated with Claude Code (Sonnet 4.5), is available in the repository and can be read in full. AIPIM is already on npm and on GitHub.

Usage is simple and explained in the README.